Data Center Basics

Published on April 15, 2023

Understanding data center fundamentals is essential in today’s digital-first world. Data centers form the backbone of modern IT, enabling the storage, processing, and delivery of vast amounts of data. This blog explores the core components, types, and design principles of data centers, helping organizations build secure, scalable, and efficient infrastructure.

Definition and purpose of data centers

Data centers are large facilities that house computer systems and associated components such as telecommunications and storage systems. The primary purpose of a data center is to store, process, and manage the massive amounts of data generated by our increasingly connected digital world. Data centers are the backbone of our modern society, supporting a wide range of digital services and applications, from social media platforms and e-commerce websites to cloud computing and artificial intelligence.

In essence, data centers are the physical manifestation of the internet, providing the infrastructure necessary for the transmission and storage of digital data. They allow organizations to collect, store, and process data on a massive scale, which is critical to their operations and decision-making processes. Data centers are also vital to the functioning of governments and other public institutions, as they provide the necessary infrastructure for critical services such as healthcare, education, and emergency response systems.

Overall, data centers play a crucial role in the functioning of our digital world, enabling the processing, storage, and transmission of data that supports our daily lives and drives economic growth.

Overview of the evolution of data centers

The evolution of data centers can be traced back to the early days of computing, when large mainframe computers required dedicated rooms to house the complex systems. However, it was not until the advent of the internet and the explosion of digital data that data centers became the massive facilities we see today.

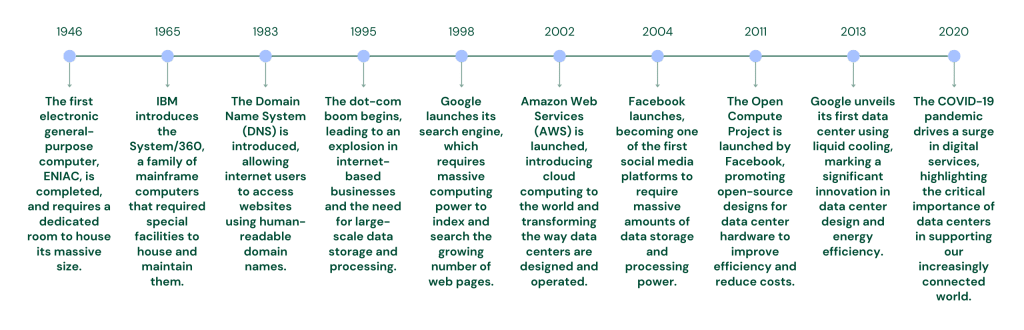

Timeline of significant data center milestones

In the early days, data centers were often owned and operated by individual companies, and their design and layout were tailored to the specific needs of those organizations. As computing power and data storage requirements increased, the need for larger and more efficient data centers grew.

In the late 1990s and early 2000s, the emergence of internet giants such as Google, Amazon, and Facebook led to a new era in data center design. These companies required massive amounts of computing power and storage capacity to support their rapidly growing businesses. They developed innovative designs for data centers that prioritized energy efficiency, scalability, and reliability, leading to the development of the modern data center.

Today, data centers continue to evolve, driven by the need for more efficient, reliable, and sustainable infrastructure to support the explosion of digital data. The adoption of cloud computing and edge computing technologies is changing the way data centers are designed and operated, and innovations such as liquid cooling and renewable energy sources are becoming more prevalent.

Overall, the evolution of data centers reflects the rapid pace of technological change in our digital world, as organizations seek to stay ahead of the curve in managing and processing the massive amounts of data generated by our increasingly connected world.

Different types of data centers

There are several different types of data centers, each designed to meet specific requirements and use cases. Here are some of the most common types:

Enterprise data centers

These data centers are owned and operated by individual companies to support their internal IT infrastructure needs. They are often designed to handle a specific workload or set of applications and are typically located on the company's premises.

Colocation data centers

Colocation data centers are facilities that provide space, power, and cooling infrastructure for multiple tenants to house their IT equipment. They offer a cost-effective way for companies to access high-quality data center infrastructure without the upfront capital investment required for building their own facility.

More about Colocation data centers: What Is Colocation Data Center?

Cloud data centers

Cloud data centers are massive facilities operated by cloud service providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform. These data centers provide on-demand access to computing and storage resources over the internet, enabling businesses to scale their IT infrastructure as needed.

Edge data centers

Edge data centers are smaller facilities located closer to end-users and connected devices, often in remote or rural areas. They are designed to provide low-latency access to data and computing resources, which is critical for applications such as streaming video, gaming, and Internet of Things (IoT) devices.

More about edge data centers: What is an Edge Data Center?

Intermediate Distribution Frame (IDF)

An IDF is a type of small-scale data center that is typically used to support the network infrastructure needs of a building or campus. It serves as a distribution point for network cabling and can include equipment such as switches, routers, and firewalls. They are generally smaller than enterprise data centers and are designed to be located closer to end-users to minimize network latency. They may be located on each floor of a building or in multiple locations throughout a campus, depending on the size and layout of the network.

Modular data centers

Modular data centers are prefabricated units that can be assembled on-site or shipped to a location for rapid deployment. They are designed to be highly scalable and can be customized to meet specific requirements, making them an ideal solution for temporary or remote IT infrastructure needs.

Overall, the different types of data centers reflect the diverse needs and use cases of businesses and organizations in our increasingly connected world. Choosing the right type of data center depends on factors such as scalability, cost, security, and performance requirements.

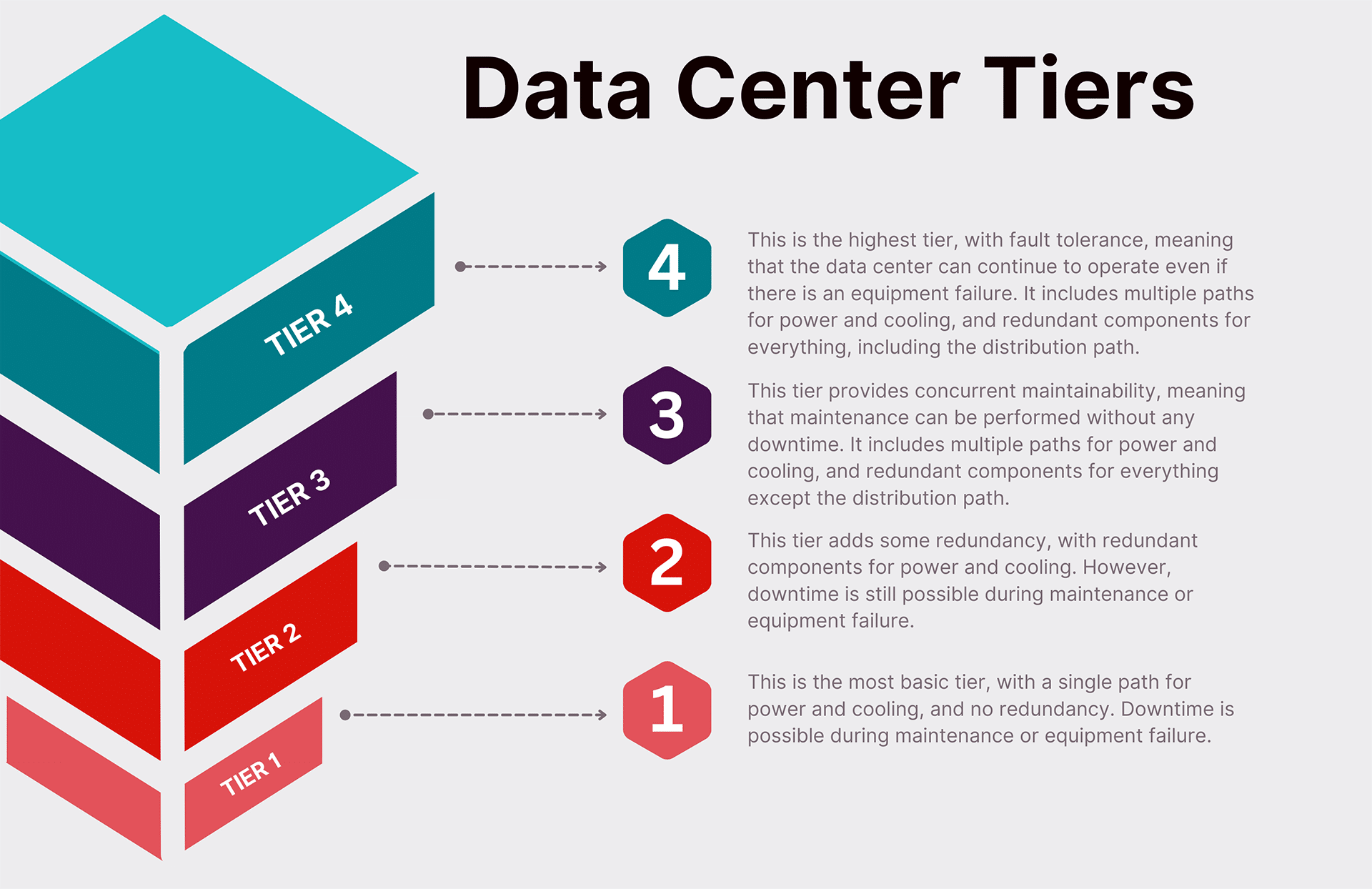

Data Center Tiers

Data center tiers are a standardized system for measuring the reliability and availability of a data center. There are four tiers, with Tier 4 being the highest level of availability and Tier 1 being the lowest. The tiers are defined by the Uptime Institute's Tier Classification System and are widely used by data center operators and customers.

Each tier requires a higher level of investment and provides a higher level of availability and reliability. The choice of tier will depend on the needs of the business or organization, and the criticality of the services hosted in the data center.
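To make the difference between tiers concrete, the sketch below converts an availability percentage into allowable downtime per year. The percentages are figures commonly cited for each tier in industry material; they are an assumption here, not values taken from this article, and should be checked against the Uptime Institute's current documentation.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years

# Commonly cited availability targets per tier (assumed for illustration).
TIER_AVAILABILITY_PERCENT = {
    "Tier 1": 99.671,
    "Tier 2": 99.741,
    "Tier 3": 99.982,
    "Tier 4": 99.995,
}


def annual_downtime_hours(availability_percent: float) -> float:
    """Return the hours per year a facility may be unavailable."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


for tier, availability in TIER_AVAILABILITY_PERCENT.items():
    hours = annual_downtime_hours(availability)
    print(f"{tier}: {availability}% available, about {hours:.1f} hours of downtime per year")
```

Under these assumed figures, the gap between the lowest and highest tier is roughly a day of downtime per year versus under an hour, which is why the criticality of the hosted services drives the choice.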

Key factors affecting data center design

Scalability

Data center design must be flexible and scalable to accommodate the changing needs of the organization. As demand for computing and storage resources grows, the data center must be able to expand and evolve to meet those needs.

Power and cooling

Power and cooling are critical components of data center design. High-density computing environments can generate a significant amount of heat, requiring sophisticated cooling systems to maintain optimal temperatures and prevent equipment failure. At the same time, data centers require a reliable and robust power supply to ensure uninterrupted operation.

Security

Data centers contain valuable and sensitive information, making security a top priority in the design process. Physical security measures such as access control and surveillance systems, as well as cybersecurity measures such as firewalls and encryption, must be implemented to protect against unauthorized access and data breaches.

Energy efficiency

Data centers are notorious for their high energy consumption, and the increasing demand for digital services is only exacerbating this issue. Designing energy-efficient data centers is critical to reduce operational costs and minimize the environmental impact of these facilities.
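One widely used way to quantify energy efficiency is Power Usage Effectiveness (PUE): total facility power divided by the power delivered to IT equipment. The metric is not discussed in this article, so treat the sketch below as an illustrative assumption; the function name and the sample figures are hypothetical.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power.

    A value of 1.0 would mean every watt reaches IT equipment; higher values
    mean more overhead for cooling, power conversion, lighting, and so on.
    """
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_equipment_kw


# Hypothetical facility: 1,500 kW total draw, of which 1,000 kW reaches IT equipment.
print(pue(1500, 1000))  # 1.5
```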

Location

The location of a data center can impact its design and operation. Factors such as proximity to customers, availability of power and cooling resources, and local regulations can all play a role in determining the optimal location for a data center.

Data center layout and construction

Raised floors

Data centers typically have raised floors, which provide space for cabling, cooling, and power distribution. The height of the raised floor is an important design consideration, as it can impact the amount of space available for equipment and airflow.

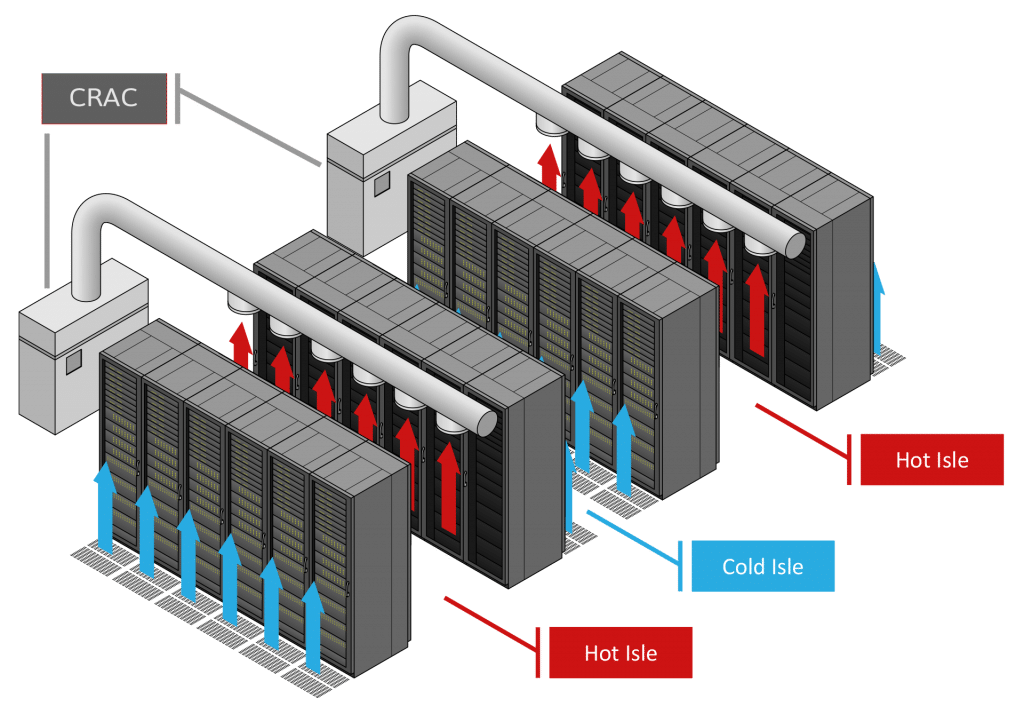

Hot and cold aisles

To optimize cooling efficiency, data centers often employ hot and cold aisle configurations. In a hot aisle/cold aisle layout, the racks are arranged in alternating rows, with cold air supplied to the equipment through the cold aisles and hot exhaust air collected in the hot aisles.

Cold aisle

In a cold aisle configuration, the fronts of the servers in adjacent rows face each other, creating a cold air intake aisle that is separated from the hot air exhaust aisle behind the servers. This setup ensures that the cool air is delivered directly to the equipment, while the hot air is exhausted away from the servers. This helps to maintain a consistent and cool temperature within the data center, which is essential for the proper functioning of the equipment.

Hot aisle

In a hot aisle configuration, the backs of the servers in adjacent rows face each other, creating a hot air exhaust aisle that is separated from the cold air intake aisle in front of the servers. This setup ensures that the hot air generated by the equipment is efficiently removed from the data center, which helps to maintain a consistent and cool temperature for the equipment and the data center as a whole.
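The amount of cold air a cold aisle must deliver follows from a basic heat balance: heat removed equals mass flow times the specific heat of air times the temperature rise between intake and exhaust. The sketch below applies that relationship. The air properties are approximate values for room conditions near sea level, and the rack load and temperature rise are hypothetical.

```python
AIR_DENSITY_KG_M3 = 1.2            # approximate, ~20 °C near sea level
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0  # approximate specific heat of dry air


def required_airflow_m3_s(heat_load_w: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to carry away heat_load_w watts.

    delta_t_k is the temperature rise from the cold aisle (intake)
    to the hot aisle (exhaust), in kelvin.
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


# Hypothetical rack: 10 kW of IT load with a 12 K rise across the servers.
flow = required_airflow_m3_s(10_000, 12)
print(f"Required airflow: {flow:.2f} m^3/s")
```

Because airflow scales inversely with the temperature rise, keeping hot and cold air from mixing lets the same cooling plant serve a larger load.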

Components of Data Centers

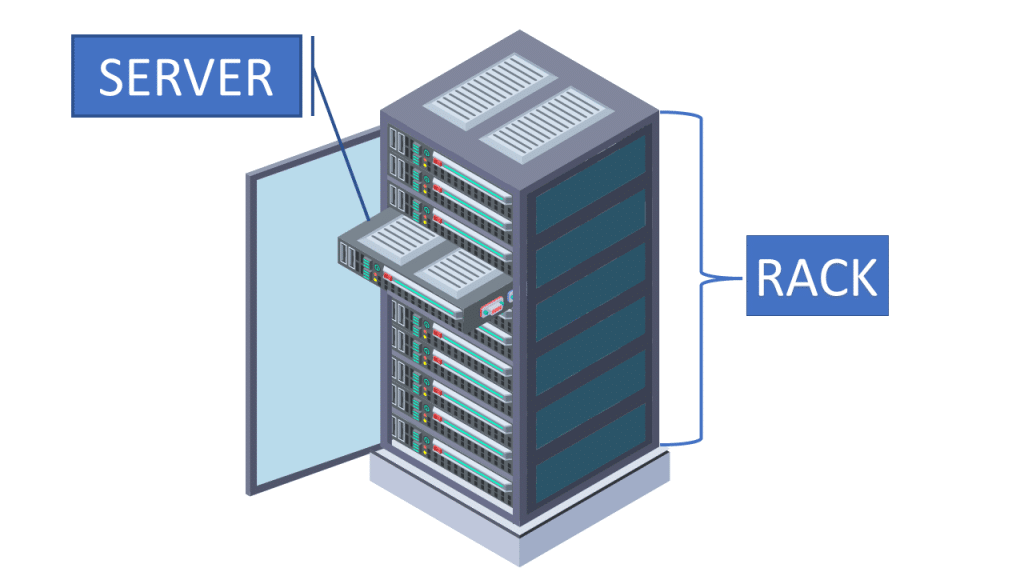

Servers and racks

Servers

Servers are the workhorses of the data center, performing a wide range of computing tasks such as data storage, processing, and network management. These servers come in a variety of shapes and sizes, from blade servers to rack-mounted servers to large-scale mainframes.

Racks

Racks are the framework that holds the servers in a data center. They are typically made of metal and can be designed to accommodate a variety of server sizes and configurations. Racks are designed to maximize airflow and cooling, while also providing easy access to servers for maintenance and upgrades.

Power and cooling systems

Power systems

Data centers require a reliable and robust power system to ensure uninterrupted operation. The power system typically includes the following components:

- Utility power feeds: Data centers typically have multiple power feeds from the utility grid to ensure redundancy and availability.

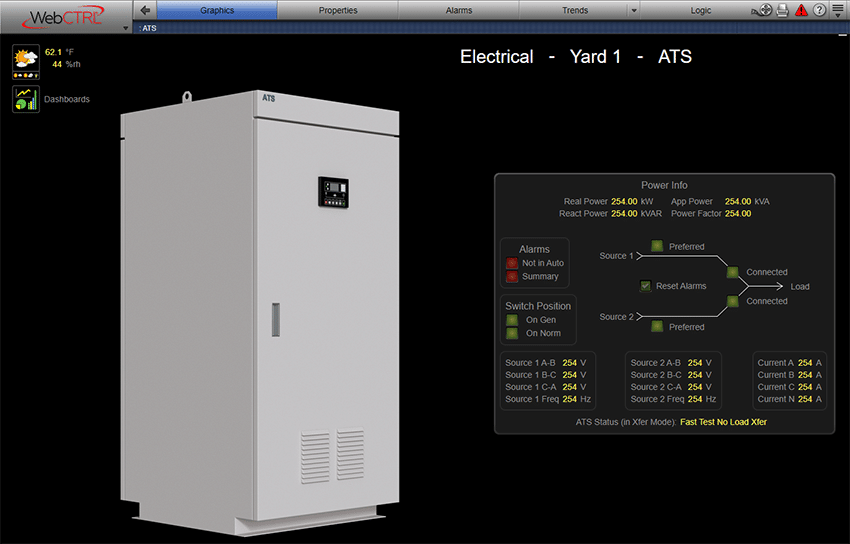

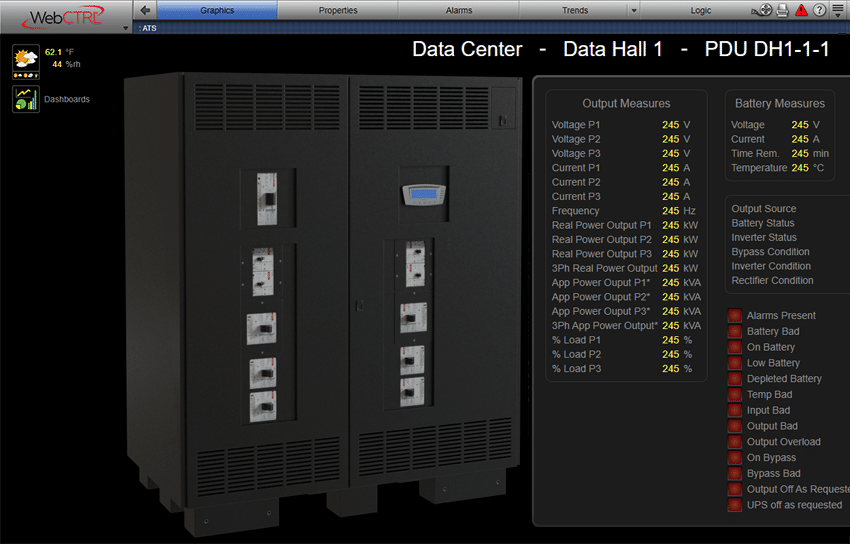

- Automatic transfer switch: A device that automatically transfers power supply from one source to another in the event of a power outage or other power-related issues. It ensures that critical equipment in the data center receives uninterrupted and reliable power supply, helping to prevent downtime and data loss.

- Backup generators: Backup generators are used to provide power in case of a utility power failure or other electrical problem.

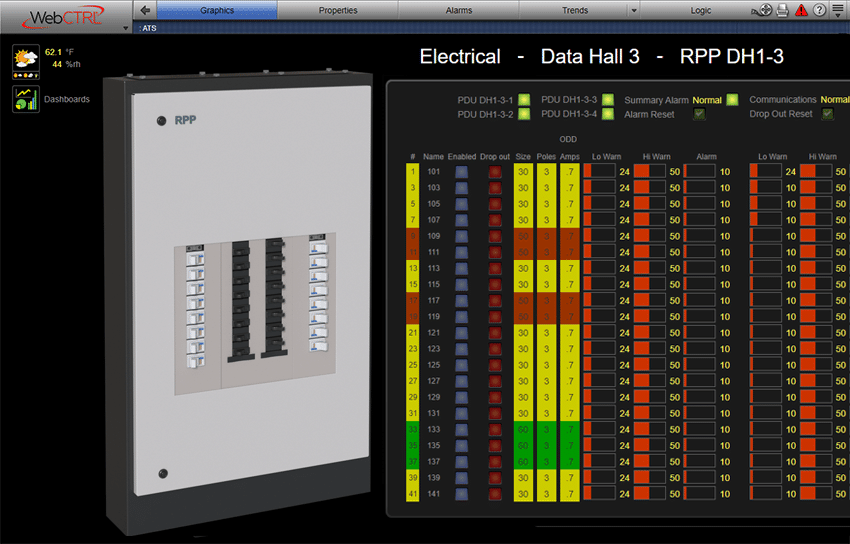

- Remote power panels (RPP): RPPs are power distribution panels that extend distribution from a main PDU to a point closer to the racks they serve. "Remote" refers to their distance from the main PDU, not to an inaccessible location. They include multiple circuit breakers or other protective devices that can be individually controlled and monitored.

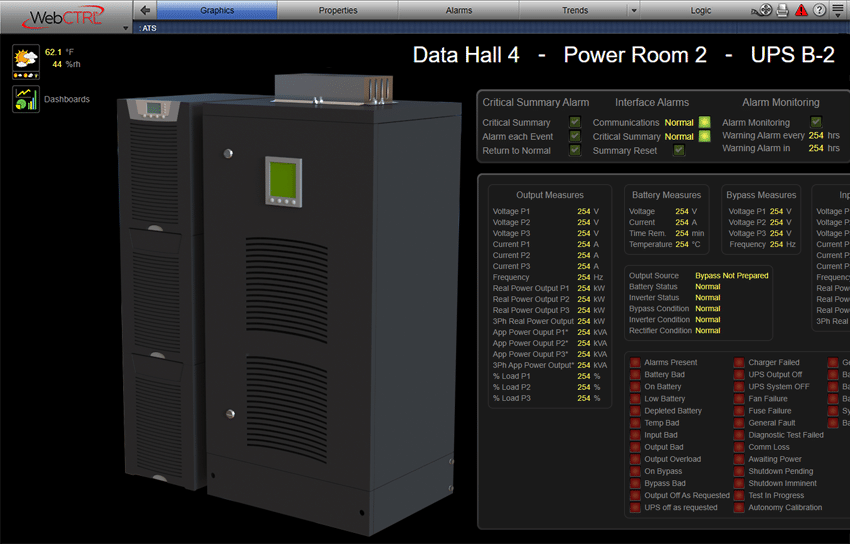

- Uninterruptible power supplies (UPS): UPS systems provide backup power in case of a power outage or other electrical problem. They typically provide power for a few minutes to allow for a smooth transition to backup generators.

- Power distribution units (PDUs): PDUs distribute power from the UPS or generator to the servers and other equipment in the data center.
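The relationship between the UPS and the backup generators can be checked with simple arithmetic: the UPS battery must carry the load for at least as long as the generators take to start and accept it. The sketch below is a rough estimate under stated assumptions. The inverter efficiency, safety factor, and sample figures are hypothetical, and real battery runtime also depends on battery age, temperature, and discharge rate.

```python
def ups_runtime_minutes(battery_wh: float, load_w: float,
                        inverter_efficiency: float = 0.9) -> float:
    """Estimate how long a UPS can carry a load, in minutes."""
    if load_w <= 0:
        raise ValueError("load must be positive")
    return battery_wh * inverter_efficiency / load_w * 60


def ups_bridges_generator_start(battery_wh: float, load_w: float,
                                generator_start_s: float,
                                safety_factor: float = 2.0) -> bool:
    """True if the UPS runtime covers the generator start time with margin."""
    runtime_s = ups_runtime_minutes(battery_wh, load_w) * 60
    return runtime_s >= generator_start_s * safety_factor


# Hypothetical figures: 20 kWh of battery, 100 kW load, 60 s generator start.
print(f"Runtime: {ups_runtime_minutes(20_000, 100_000):.1f} minutes")
print("Bridges generator start:", ups_bridges_generator_start(20_000, 100_000, 60))
```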

Cooling systems

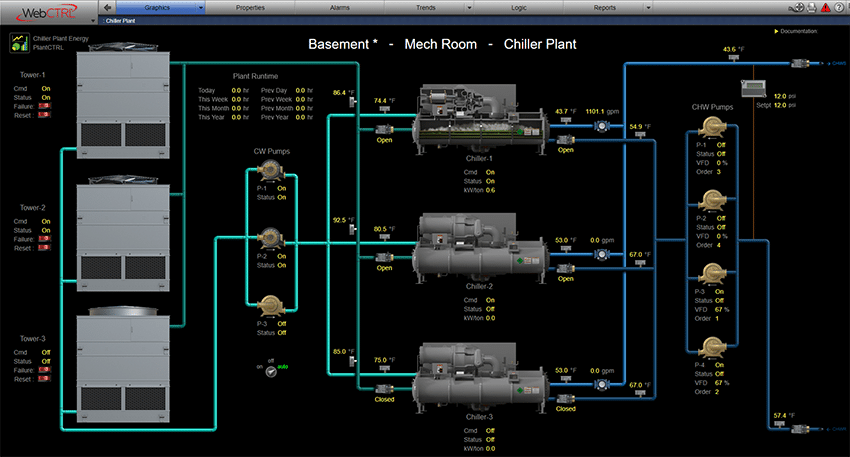

High-density computing environments generate a significant amount of heat, and efficient cooling systems are critical to maintaining optimal temperatures and preventing equipment failure. The cooling system typically includes the following components:

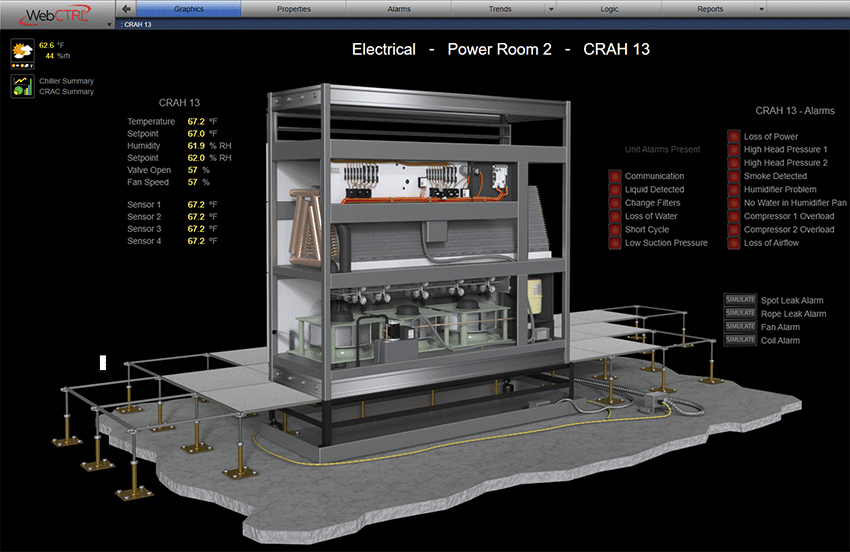

- Computer room air conditioning (CRAC) units: CRAC units are used to cool the air in the data center and maintain a consistent temperature.

- Chiller plants: Chiller plants cool the water that is circulated to chilled-water cooling units, such as computer room air handlers (CRAHs) and chilled-water CRAC units.

- Free cooling systems: Free cooling systems use outside air to cool the data center, reducing the need for mechanical cooling.

In addition to power and cooling systems, other key components of a data center include servers, storage devices, network switches and routers, and fire suppression systems. By carefully selecting and configuring these components, data center operators can create a reliable and efficient computing environment to support their organization's IT infrastructure needs.

Data storage systems

The most common data storage systems used in data centers are:

Direct-attached storage (DAS)

DAS is a simple storage solution that connects directly to a server, providing fast access to data. However, DAS is not ideal for large-scale data storage, as it lacks the scalability and redundancy of other storage solutions.

Network-attached storage (NAS)

NAS is a specialized storage device that connects to a network, providing a centralized storage solution that is easily accessible to multiple servers and users. NAS devices are typically less expensive than other storage solutions, making them an attractive option for small to medium-sized businesses.

Storage area network (SAN)

SAN is a high-performance storage solution that provides block-level access to data over a dedicated network. SANs are designed for large-scale data storage and can be scaled up to meet the needs of enterprise-level organizations.

Object storage

Object storage is a data storage architecture that uses a flat address space to store data objects. Object storage is highly scalable and resilient, making it an ideal solution for organizations with large-scale data storage needs.
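The idea of a flat address space can be illustrated with a toy in-memory store: every object lives under a single key, and anything that looks like a folder is only a prefix of that key. This is a minimal sketch for illustration, not how any particular object storage product is implemented; the class and method names are hypothetical.

```python
import hashlib


class ObjectStore:
    """Toy object store: one flat mapping from key to (data, metadata)."""

    def __init__(self):
        self._objects = {}

    def put(self, key: str, data: bytes, **metadata) -> str:
        """Store an object and return a content hash that identifies its data."""
        etag = hashlib.sha256(data).hexdigest()
        self._objects[key] = (data, {**metadata, "etag": etag})
        return etag

    def get(self, key: str) -> bytes:
        """Return the stored bytes for a key (raises KeyError if absent)."""
        return self._objects[key][0]

    def list_keys(self, prefix: str = "") -> list:
        """List keys sharing a prefix; there are no real directories."""
        return sorted(k for k in self._objects if k.startswith(prefix))


store = ObjectStore()
store.put("logs/2023/04/15.txt", b"hello", content_type="text/plain")
store.put("logs/2023/04/16.txt", b"world", content_type="text/plain")
store.put("images/rack.png", b"\x89PNG", content_type="image/png")
print(store.list_keys("logs/"))
```

Because there is no hierarchy to maintain, keys can be spread across many machines by hashing them, which is one reason this architecture scales well.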

In addition to these storage solutions, data centers may also use tape libraries and cloud storage as part of their overall storage strategy. By carefully selecting and configuring their data storage systems, data center operators can ensure that they have a reliable and efficient data storage infrastructure to meet their organization's IT needs.

Network infrastructure

The network infrastructure is a critical component of a data center, providing connectivity and communication between servers, storage systems, and other devices. Here's an overview of the most common network components used in data centers:

Network switches

Network switches are used to connect servers and storage systems together, providing high-speed connectivity and facilitating communication between devices. Switches are typically categorized by their speed (such as 1 Gigabit, 10 Gigabit, or 100 Gigabit) and the number of ports they have.

Routers

Routers are used to connect different networks together and provide traffic management and routing capabilities. In a data center environment, routers are typically used to connect the data center network to external networks, such as the Internet.

Firewalls

Firewalls are used to control network traffic and provide security by monitoring and filtering incoming and outgoing traffic based on predefined rules. Firewalls are essential for protecting the data center from unauthorized access and cyber-attacks.
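Filtering "based on predefined rules" usually means walking an ordered rule list and applying the first rule that matches, with a default action when nothing matches. The sketch below shows that idea with Python's standard `ipaddress` module. The rule format, the sample rules, and the default-deny policy are assumptions for illustration; real firewalls match on many more fields.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    action: str          # "allow" or "deny"
    source: str          # source network in CIDR notation
    port: Optional[int]  # destination port; None matches any port


def evaluate(rules: List[Rule], src_ip: str, dst_port: int,
             default: str = "deny") -> str:
    """Return the action of the first matching rule, or the default action."""
    addr = ip_address(src_ip)
    for rule in rules:
        if addr in ip_network(rule.source) and rule.port in (None, dst_port):
            return rule.action
    return default


# Hypothetical policy: SSH only from the internal network, HTTPS from anywhere.
RULES = [
    Rule("allow", "10.0.0.0/8", 22),
    Rule("allow", "0.0.0.0/0", 443),
]

print(evaluate(RULES, "10.1.2.3", 22))      # internal SSH
print(evaluate(RULES, "203.0.113.7", 22))   # external SSH
print(evaluate(RULES, "203.0.113.7", 443))  # external HTTPS
```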

Load balancers

Load balancers are used to distribute network traffic across multiple servers, improving performance and availability. Load balancers are especially useful in large-scale data centers where a single server may not be able to handle all incoming requests.
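Round-robin is one of the simplest distribution strategies a load balancer can use: each new request goes to the next server in a fixed rotation. The sketch below is a minimal illustration with hypothetical server names. Production load balancers typically add health checks, weighting, and connection tracking.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out servers in a fixed rotation, one per request."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def pick(self) -> str:
        """Return the server that should handle the next request."""
        return next(self._rotation)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([balancer.pick() for _ in range(5)])
# ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```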

Network cabling

Network cabling is used to connect devices together, providing a physical link for data transmission. In data centers, cabling must be carefully planned and organized to ensure efficient use of space and minimize cable clutter.

Additional Resources

Learn the 6 best practices organizations should adhere to when designing, creating, and maintaining a highly efficient data center.

How green is your data center? A green facility focuses on continually reducing its demand on the environment.

Should an application remain in your on-premise data center or be migrated to the public cloud? Optimize for cost, performance, and security.

Integrating a Building Automation System (BAS) with a Data Center Infrastructure Management System (DCIM) can provide significant benefits for data center operators, including improved energy efficiency, real-time monitoring and reporting, predictive maintenance, and improved sustainability.

Additional Definitions

ATS stands for Automatic Transfer Switch, which is a device that automatically transfers power supply from one source to another in the event of a power outage or other power-related issues. An ATS is typically installed between the power sources, such as the main utility power and a backup generator or UPS, and the critical equipment in the data center. The ATS continuously monitors the power supply and switches to the backup source within milliseconds if it detects a power outage or a drop in voltage or frequency. This ensures that the critical equipment receives uninterrupted and reliable power supply, and helps to prevent downtime and data loss. ATS devices can also include additional features, such as load shedding, voltage regulation, and surge suppression, to protect the connected devices from power-related issues. ATS devices are essential components of modern data center infrastructure and are critical for ensuring the availability and reliability of mission-critical applications and services.

BAS stands for Building Automation System, which is a computer-based control system that manages and monitors a building's mechanical, electrical, and plumbing (MEP) systems. A BAS typically integrates various components, including HVAC (heating, ventilation, and air conditioning), lighting, security, fire safety, and energy management systems, into a centralized platform. It allows building operators to optimize energy efficiency, reduce operating costs, and enhance occupant comfort and safety. The BAS collects data from sensors and controllers installed throughout the building, analyzes it, and uses the results to automatically adjust and regulate the MEP systems. It can also generate reports, alarms, and alerts to notify operators of any anomalies or issues that require attention. BASs are commonly used in large commercial and institutional buildings, such as office buildings, hospitals, schools, and airports.

CRAC stands for Computer Room Air Conditioning, which refers to the specialized air conditioning system used to regulate temperature, humidity, and airflow in data centers and other critical IT facilities. The CRAC system is designed to maintain a stable and controlled environment to ensure the optimal performance and reliability of servers, storage, and networking equipment. It typically includes precision cooling units, air handlers, ductwork, and monitoring/control systems to deliver conditioned air to the equipment and remove heat generated by the IT loads. The CRAC system is a crucial component of modern data center infrastructure, as it helps to prevent equipment overheating, downtime, and potential data loss.

CRAH stands for Computer Room Air Handler and refers to a type of air conditioning unit used in data centers. A CRAH unit is designed to regulate the temperature and humidity of the air within a data center by circulating cool air through the space and removing hot air generated by the computer equipment.

CRAH units are typically installed in a raised floor environment, where they draw in warm air from the data center and pass it over a cooling coil to remove the heat. The cooled air is then distributed back into the data center to maintain a consistent temperature and humidity level.

CRAH units are an essential component of modern data center infrastructure and are designed to operate with high efficiency to minimize energy consumption and operating costs.

DAS stands for Direct-Attached Storage, which is a storage architecture that connects storage devices directly to a server or a workstation, without using a network. DAS devices can be internal or external to the host device, and they can use different types of interfaces, such as SCSI, SATA, SAS, or USB, to communicate with the host. DAS is a simple and cost-effective storage solution that provides high-speed access to data and is easy to install and manage. However, it has some limitations in terms of scalability and data sharing, as DAS devices can only be accessed by the host device to which they are directly attached. DAS is commonly used in small businesses, workstations, and entry-level servers, as well as in some specialized applications, such as digital audio and video editing.

DNS stands for Domain Name System, which is a decentralized naming system used to map human-readable domain names to the IP addresses used by computers and other devices to identify each other on the Internet or other networks. DNS allows users to access resources, such as websites, email servers, or other network services, using simple and easy-to-remember domain names, instead of using complex and hard-to-remember IP addresses. When a user types a domain name into a web browser or other application, the DNS system resolves the domain name into the corresponding IP address by querying a series of hierarchical and distributed DNS servers. DNS servers store and propagate information about domain names and their corresponding IP addresses using a hierarchical naming scheme, which starts with the root domain and extends to the top-level domains (TLDs), second-level domains, and subdomains. DNS is a critical component of the Internet infrastructure and plays a key role in enabling the connectivity and interoperability of millions of devices and services worldwide.
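The hierarchical naming scheme can be made concrete by listing the zones a resolver conceptually walks through, from the root down to the full name. The sketch below only manipulates the name itself and performs no network lookups; the function name is hypothetical, and real resolvers rely heavily on caching and may skip levels.

```python
from typing import List


def resolution_path(domain: str) -> List[str]:
    """Return the zones from the root down to the full domain name."""
    labels = domain.rstrip(".").split(".")
    path = ["."]  # the root zone
    # Walk from the rightmost label (the TLD) toward the leftmost (the host).
    for i in range(len(labels) - 1, -1, -1):
        path.append(".".join(labels[i:]))
    return path


print(resolution_path("www.example.com"))
# ['.', 'com', 'example.com', 'www.example.com']
```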

IDF stands for Intermediate Distribution Frame. An IDF is a network distribution point, usually a cable rack or wiring closet, that is used to connect network devices such as servers, switches, routers, and other network equipment within a building or campus. An IDF is typically installed on each floor or in different sections of a building to provide connectivity to devices located in that specific area. It is connected to the main distribution frame (MDF) or the core switch, which links all the IDF closets together. The purpose of the IDF is to provide a more localized connection point for network devices, reducing the amount of cabling required to connect devices to the MDF or core switch, and simplifying the management and maintenance of the network infrastructure.

DCIM stands for Data Center Infrastructure Management. It is a software solution that provides centralized management and monitoring of a data center’s IT and physical infrastructure. DCIM allows data center operators to monitor, analyze, and manage various aspects of the infrastructure, including power and cooling systems, server utilization, capacity planning, and environmental conditions. DCIM provides a holistic view of the data center’s performance and helps operators make informed decisions on optimizing energy efficiency, reducing waste, and ensuring business continuity. The software typically includes real-time monitoring, reporting, and analysis tools, as well as automation and integration capabilities with other data center systems. DCIM is an essential tool for managing modern data centers, which are becoming increasingly complex and face growing demands for energy efficiency, reliability, and sustainability.

MDF stands for Main Distribution Frame. An MDF is a central point in a telecommunications room or data center where all the incoming communication lines are terminated and connected to the network devices. The MDF acts as a central hub for all the data and voice communication lines, including telephone lines, internet lines, and local area network (LAN) cables. From the MDF, the communication lines are then distributed to other network distribution points, such as Intermediate Distribution Frames (IDFs) located on each floor or area of a building. The MDF is typically used in large-scale enterprise networks and serves as the main termination point for all the communication services that are required for the network to function effectively. The MDF is usually managed and maintained by the IT staff responsible for managing the network infrastructure of an organization.

NAS stands for Network Attached Storage, which is a file-level storage technology that enables multiple devices to access shared storage resources over a network. A NAS device is a dedicated appliance that contains one or more hard drives, and is connected to a local area network (LAN) or a wide area network (WAN). It uses file-level protocols, such as Network File System (NFS) or Server Message Block (SMB), to enable clients to access files and folders stored on the NAS device as if they were stored locally on their own devices. NAS devices typically offer features such as data encryption, backup and recovery, remote access, and media streaming. They are commonly used in home and small office environments, as well as in enterprise environments, for storing and sharing files and for backup and disaster recovery purposes.

PDU stands for Power Distribution Unit, which is a device that distributes electric power to multiple devices or equipment within a data center or other IT environment. A PDU is typically installed in a rack or a cabinet and connects to a power source, such as a UPS or a generator. It includes multiple outlets, each of which can be individually controlled and monitored. PDUs can be classified into different types based on their functionalities, such as basic PDUs, metered PDUs, switched PDUs, and intelligent PDUs. Basic PDUs provide only power distribution, while metered and switched PDUs offer additional monitoring and control features, such as current monitoring, remote outlet control, and power usage reporting. Intelligent PDUs provide advanced features, such as environmental monitoring, automated load shedding, and remote management via a web interface or a network management system. PDUs are essential components of modern data center infrastructure, as they help to optimize power usage, reduce energy costs, and ensure the availability and reliability of critical IT equipment.
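The per-outlet monitoring that metered and intelligent PDUs provide is typically used to check the total load against the capacity of the circuit feeding the PDU. The sketch below sums hypothetical outlet readings and compares them with a breaker rating. The 80% continuous-load limit is a common North American practice and is an assumption here; local electrical codes and the PDU's own rating take precedence.

```python
def pdu_load_report(outlet_amps: dict, breaker_rating_amps: float,
                    continuous_limit: float = 0.8) -> dict:
    """Summarize PDU load against the derated capacity of its breaker.

    outlet_amps maps an outlet identifier to its measured current in amps.
    """
    total = sum(outlet_amps.values())
    limit = breaker_rating_amps * continuous_limit
    return {
        "total_amps": total,
        "limit_amps": limit,
        "headroom_amps": limit - total,
        "overloaded": total > limit,
    }


# Hypothetical readings from a three-outlet PDU on a 20 A circuit.
readings = {"outlet-1": 4.0, "outlet-2": 6.5, "outlet-3": 3.5}
report = pdu_load_report(readings, breaker_rating_amps=20)
print(f"Load: {report['total_amps']:.1f} A of {report['limit_amps']:.1f} A usable")
print("Overloaded:", report["overloaded"])
```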

RPP stands for Remote Power Panel, which is a power distribution panel that is located away from the main PDU and closer to the racks it serves within a data center or other IT environment. An RPP distributes electric power to multiple devices or pieces of equipment and includes multiple circuit breakers or other protective devices that can be individually controlled and monitored. RPPs can be designed to support high-density server environments and can provide up to 400 amps of power distribution in a single unit. RPPs are important for simplifying and optimizing power distribution in the data center and can be integrated with other power management systems, such as power monitoring, energy management, and capacity planning tools, to optimize energy efficiency and reduce costs.

SAN stands for Storage Area Network, which is a specialized network architecture that provides high-speed access to block-level data storage. A SAN typically consists of servers, storage devices, and switches that are interconnected using fiber optic or copper cables. It enables multiple servers to access shared storage resources, such as disk arrays, tape libraries, or solid-state drives, as if they were directly attached to each server. This allows for centralized storage management, improved data availability, and simplified data backup and recovery processes. SANs are commonly used in data centers, where they provide a scalable and reliable storage infrastructure for enterprise applications, databases, virtual machines, and other critical workloads. They are also used in video surveillance, scientific research, and media production environments, where high-bandwidth, low-latency storage access is essential.

UPS stands for Uninterruptible Power Supply, which is a backup power system that provides emergency power to critical devices or equipment in the event of a power outage or other power disruptions. A UPS includes a battery or other energy storage device that provides power to the connected devices for a certain period, typically from a few minutes to an hour, depending on the load and the capacity of the UPS. UPS devices are commonly used in data centers, hospitals, industrial facilities, and other critical applications that require continuous and reliable power supply. They come in different types and configurations, such as standby UPS, line-interactive UPS, and online UPS, each of which offers different levels of protection and efficiency. UPS devices can also include additional features, such as surge suppression, voltage regulation, and power conditioning, to protect the connected devices from power spikes, sags, and other power-related issues.